🚀 Unlock AI-Driven Growth with BhuviAI

AGENTIC AI TECHNOLOGY SOLUTIONS | ENTERPRISE PLATFORMS | GEN-AI INNOVATION

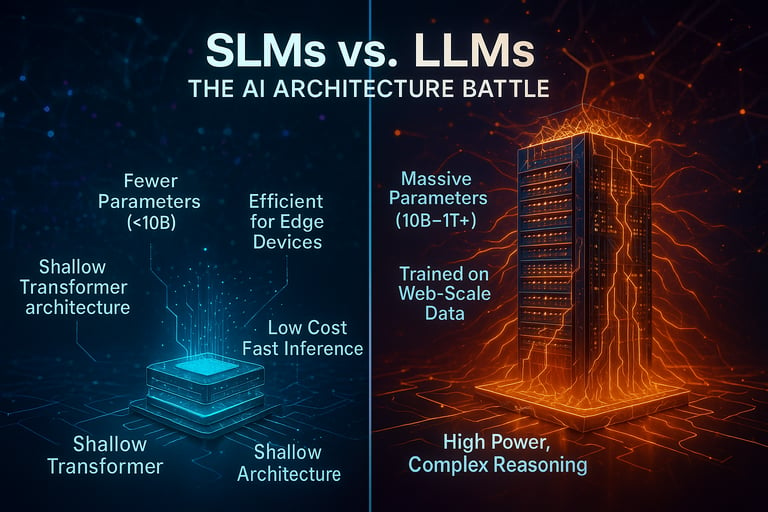

The Architecture Battle - SLM vs LLM

In the rapidly evolving AI landscape, the debate between Specialized Language Models (SLMs) and Large Language Models (LLMs) is reshaping enterprise adoption. This article breaks down their architectural differences, cost-performance trade-offs, and real-world applications—helping you choose the right AI backbone for your business needs. Key Takeaways: ✅ When to use SLMs (efficiency, domain-specific tasks) vs LLMs (generalization, creativity) ✅ Hidden costs: Training, inference, and maintenance compared ✅ Future outlook: Will hybrid architectures dominate? For AI leaders, builders, and decision-makers—cut through the hype with actionable insights.

CORE AI & TECHNOLOGY

Rajeev Sharma, Founder | CEO - BhuviAI

7/19/20252 min read

The architectural differences between Small Language Models (SLMs) and Large Language Models (LLMs) primarily revolve around model size, computational requirements, training data, and use-case optimizations. Below is a detailed breakdown of their key distinctions:

1. Model Size & Parameters

SLMs (Small Language Models)

Typically have fewer than 10 billion parameters (often in the millions to low billions).

Examples:

Microsoft's Phi-1.5 (1.3B parameters)

Google’s Gemma-2B (2B parameters)

Meta’s Llama 2-7B (7B parameters)

Designed to be lightweight, making them suitable for edge devices (phones, IoT) or low-resource environments.

LLMs (Large Language Models)

Usually have tens to hundreds of billions of parameters (or even trillions for frontier models).

Examples:

OpenAI’s GPT-4 (~1.8T parameters rumored)

Google’s Gemini 1.5 (up to ~1T parameters)

Meta’s Llama 3-70B (70B parameters)

Built for high-performance tasks requiring deep reasoning, long-context understanding, and complex generation.

2. Training Data & Pretraining

SLMs

Trained on smaller, curated datasets (sometimes synthetic or high-quality filtered data).

Often use knowledge distillation (training smaller models to mimic larger ones).

Example: Microsoft’s Phi-3 was trained on "textbook-quality" synthetic data for efficiency.

LLMs

Trained on massive, diverse datasets (often petabytes of internet text).

Use web-scale scraping (CommonCrawl, GitHub, books, etc.).

Benefit from self-supervised learning (predicting next token in a sentence).

3. Architectural Optimizations

(A) Model Depth & Width

SLMs

Fewer layers (e.g., 12–24 transformer layers) and narrower hidden dimensions.

Use efficient attention mechanisms (e.g., sliding window attention, sparse attention).

Example: Mistral-7B uses grouped-query attention (GQA) for faster inference.

LLMs

Deeper networks (e.g., 60–120+ transformer layers) with wide hidden states (e.g., 8192+ dimensions).

Use dense attention (full self-attention) or mixture-of-experts (MoE) (e.g., GPT-4).

(B) Memory & Compute Efficiency

SLMs

Optimized for low-memory footprint (can run on CPUs or mobile GPUs).

Use quantization (e.g., 4-bit or 8-bit weights) for efficiency.

Example: Phi-2 runs efficiently on a single GPU.

LLMs

Require high-end GPUs/TPUs (e.g., H100 clusters) for training/inference.

Use model parallelism (tensor/pipeline parallelism) to distribute workload.

4. Use Cases & Deployment

SLMs

Edge AI: On-device chatbots (e.g., Microsoft’s Phi-3 for smartphones).

Specialized tasks: Code completion (StarCoder 3B), medical QA.

Cost-effective inference: Lower latency, cheaper API costs.

LLMs

General-purpose AI: ChatGPT, Claude, Gemini.

Complex reasoning: Advanced math, legal analysis, long-form writing.

Enterprise applications: Custom fine-tuning for big corporations.

5. Fine-Tuning & Adaptability

SLMs

Easier to fine-tune on small, domain-specific datasets.

Used in federated learning (privacy-preserving training on edge devices).

LLMs

Require massive compute for fine-tuning (LoRA, QLoRA help reduce cost).

Often fine-tuned via reinforcement learning from human feedback (RLHF).

6. Performance Trade-offs

Feature level differentiations

Parameters : SLMs < 10 B | LLMs > 10B–1T+

Training Data : Smaller, curated | Web-scale, diverse

Hardware : CPUs, mobile GPUs | GPU/TPU clusters

Latency : Low (real-time) | High (requires batching)

Accuracy : Good for narrow tasks | SOTA in general tasks

Cost : Cheap to run | Expensive API/cloud costs

Conclusion

SLMs are optimized for efficiency, speed, and edge deployment, sacrificing some generality.

LLMs prioritize maximum capability but require heavy infrastructure.

Trend: Hybrid approaches (e.g., Mixture-of-Experts, quantization) are blurring the lines between SLMs and LLMs.

🔗 About BhuviAI

At BhuviAI Solutions, we specialize in building scalable, open-source-driven AI toolchains and agent-based solutions. This visualization is part of our effort to make AI more explainable, composable, and usable across industries.

For collaboration or advisory inquiries, reach out at;

📧 mail us at info@bhuviai.com

📲 call us at +91 99719 38001

🌐 visit us at www.bhuviai.com

Agentic AI Technology Solutions

Enterprise-grade AI platforms and solutions, from strategy to governed deployment.

Office Address

START A CONVERSATION

Plot-2 Sector-56 Gurugram Haryana, India 122011

+91-9971938001

© 2026. All rights reserved.

info@bhuviai.com

Share your use case or challenge with our AI experts.